As it is, the world is unfair. The question now is, do we want automated tech to be unfair too? As we build more and more AI-dependent smart digital infrastructure in our cities and beyond, we have pretty much overlooked the emerging character of artificial intelligence that would have a profound bearing on our nature and future.

Are we happy with algorithms making decisions for us? Naturally, one would expect the algorithm to possess discretion.

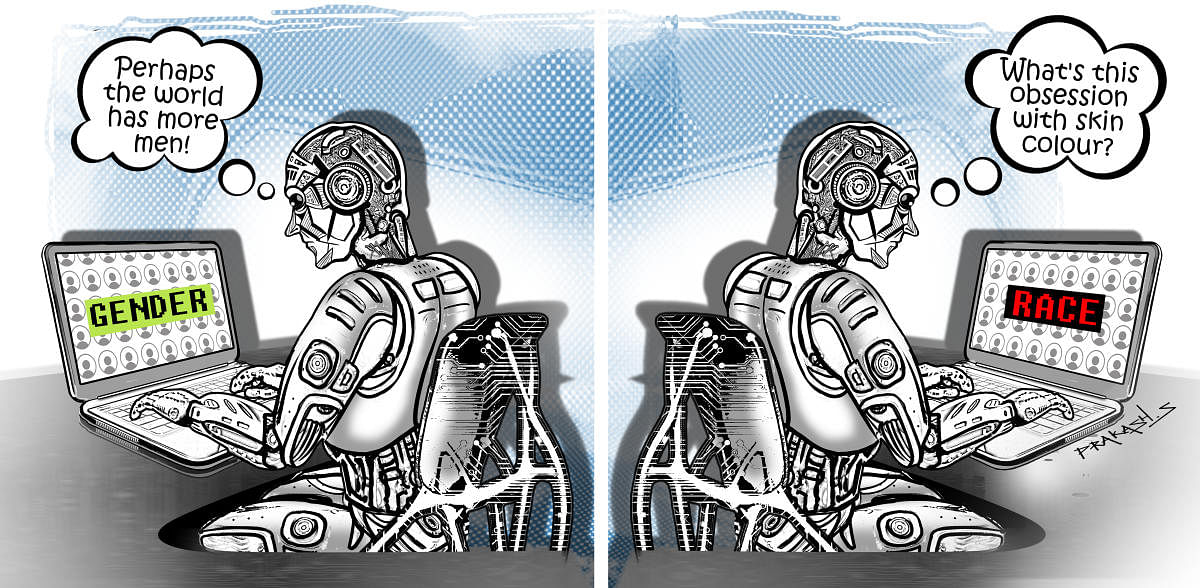

Herein lies the dilemma. Do you trust an AI algorithm? Though an algorithm can evolve over time, drawing on the nature and accuracy of its dataset, it will nevertheless pick up the prejudices and biases it is exposed to.

“Questions on fairness arise at multiple stages of AI design. For instance, who has access to large datasets? The private sector in India. There may not be data at all on marginalised communities while there can be excessive surveillance data on targeted communities. Historic biases in datasets add up: widely used leading datasets of word embeddings associate women as homemakers and men as computer programmers. Focus on FAT (Fairness, Accountability and Transparency) is crucial,” says Radhika Radhakrishnan, programme officer at The Centre for Internet and Society.

For example, a whopping 90% of Wikipedia editors are men.

AI is expected to add 15 trillion US dollars to the global economy by 2030, yet the data it relies upon currently comes from a few nations (45% from the US), while a major chunk of its users are elsewhere. As with any social mechanism, diversity will be key if we are to reap the true benefits of AI. Otherwise, a non-diverse dataset or the programmer crafting an algorithm could chart the most unpleasant course.

“Research recommends the inclusion of social scientists in AI design and ensuring they have decision-making power. The AI Now Institute, for instance. However, there is a dearth of social scientists working on AI. In India, we ignore the social impact of AI in favour of the purely technical solutions of computer scientists. Lack of women, gender-queers, and individuals from under-represented communities reflects poor diversity within the AI industry,” Radhakrishnan points out.

In June, the G20 adopted AI Principles, which stress that “AI actors should respect the rule of law, human rights and democratic values, throughout the AI system life-cycle. These include freedom, dignity and autonomy, privacy and data protection, non-discrimination and equality, diversity, fairness, social justice, and internationally recognised labour rights.”

The UK recently set up the Centre for Data Ethics and Innovation. Canada and France are spearheading the International Panel on Artificial Intelligence (IPAI) on the sidelines of the G7 summit. Meanwhile, India and France agreed on a slew of measures to advance cooperation on digital tech. Of course, the EU’s General Data Protection Regulation (GDPR) is a promising start.

All of that is well and welcome. But what such efforts and international bodies can achieve in reality remains to be seen, as questions loom large over the clout exercised by the private corporations that own the tech, which leaves AI vulnerable to manipulation.

“To achieve this, panel members will need to be protected from direct or indirect lobbying by companies, pressure groups and governments — especially by those who regard ethics as a brake on innovation. That also means that panel members will need to be chosen for their expertise, not for which organisation they represent,” reads an August 21 editorial in Nature journal on IPAI.

Whatever one may do to de-bias AI, much damage is done already. Try a Google search for images of hands. How many black or brown hands do you see? There you go.

“Whose needs are being reflected in AI — those of the poor or those of the big tech looking to ‘dump’ their products in an easily exploitable market? Instead of asking, what is the AI solution, we should be wondering, is an AI-based solution necessary in this case?” adds Radhakrishnan.

Where are the big tech companies located? In the United States. When a white male sitting in that country crafts an algorithm based on a purchased dataset, for the benefit of an aboriginal community in the Amazon, something’s amiss.

“Engineers and data scientists who design algorithms are often far removed from the socio-economic contexts of the people they are designing the tools for. So, they reproduce ideologies that are damaging. They end up reinforcing prejudices. Direct engagement is rare. Engineers should actively and carefully challenge their biases and assumptions by engaging meaningfully with communities to understand their histories and needs,” explains Radhakrishnan.

The march of AI cannot be stopped as more and more datasets get integrated. An ethical approach to computer science and engineering should begin at our institutions of excellence.

“Computer science and engineering disciplines at the undergraduate level teach AI as a purely technical subject, not as an interdisciplinary subject. Engineers should be trained in the social implications of the systems they design. Technology inevitably reflects its creators, conscious or not. Therefore, deeper attention to the social contexts of AI and the potential impact of such systems when applied to human populations should be incorporated to university curricula,” notes Radhakrishnan.