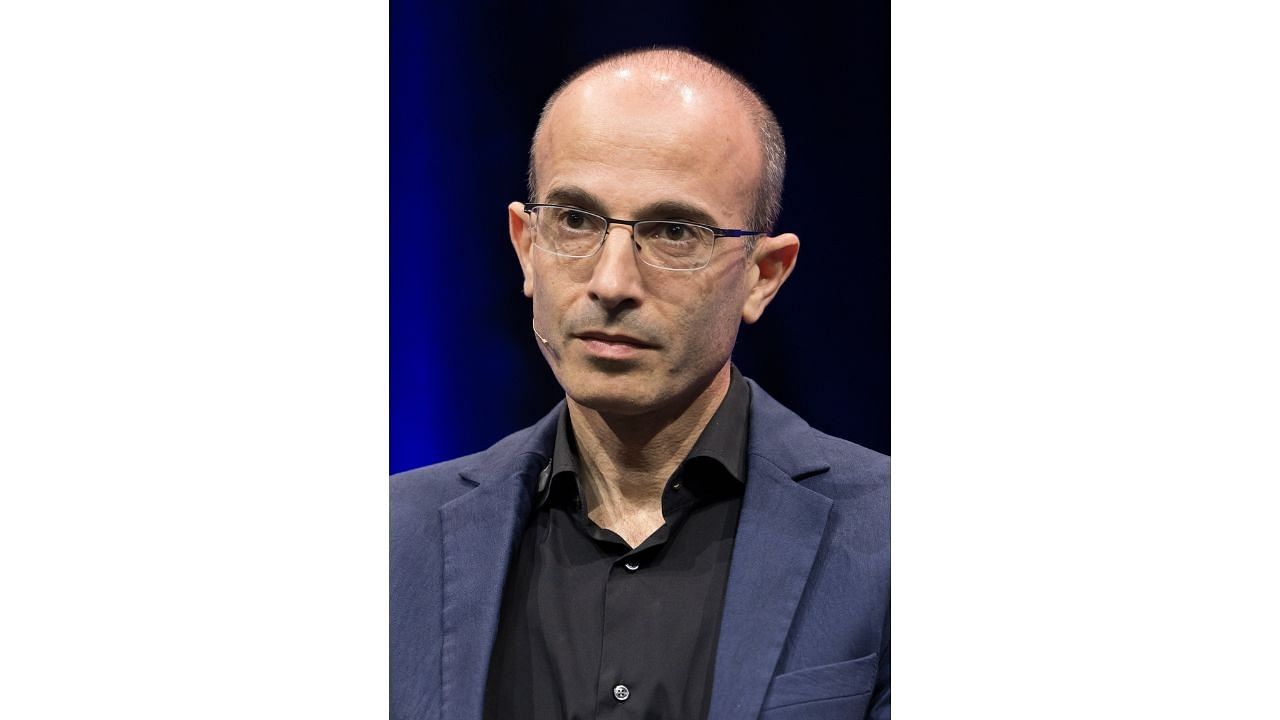

Yuval Noah Harari

Credit: Wikimedia

For those curious about how the world may change in a decade, or perhaps even five years, it is instructive to follow the global conversation around AI. Broadly, the discourse has three strands. One is the widespread laudatory view of AI’s transformative power — its game-changing potential to elevate diverse areas of our existence, such as healthcare, security, education, and research. Another is the contrarian take of public intellectuals like Daron Acemoglu, the 2024 Economics Nobel Prize winner and leading economic scholar of technology, who has pooh-poohed AI’s current capabilities and called them overrated. And then there’s the stance of people the Internet has dubbed “AI doomers” — the thinkers and experts who consider AI a truly destructive force, or humanity’s Frankenstein monster, if you will.

With his recently released book Nexus, the Israeli historian-philosopher and bestselling author Yuval Noah Harari has positioned himself among the doomsayers. Taking a long view of our political future in the age of AI, he comes to the frightening conclusion that unfettered AI growth could be perilous for liberal democracies, and that totalitarian states wouldn’t have a free ride either. To be sure, there’s common ground among most experts, across disciplines, about the very real and pressing short-to-medium-term existential risks of AI, namely that it is taking away jobs, perhaps millions of them, exponentially amplifying misinformation, and creating systemic biases by increasingly becoming the only decision-maker in crucial real-world situations. However, “AI doomers” like Harari see a more cataclysmic outcome of the AI revolution: a technology so smart that it could get out of control and wreak havoc on humanity in unimaginable ways.

The doomers’ dire prognosis is nothing like sci-fi movie clichés, à la the computer overlord Skynet waging war on humans in the Terminator series. Instead, the doomer philosophy is based on what is commonly called the “alignment problem” in AI, or more specifically in Artificial General Intelligence (AGI), a branch of theoretical AI research focused on software with human-like intelligence and the ability to self-teach.

The alignment problem is the premise that to be dangerous, a superintelligent AI system doesn’t really need to be sentient, or ‘turn’ on its human masters and deploy killer robots. It will be destructive if its immediate goals are at odds, even in small ways, with what is actually in the interest of human beings. A good example is the world’s first reported case of a self-driving car killing a pedestrian. In Arizona in 2018, an Uber test vehicle hit a woman pushing a bicycle across a road because its system was trained to view objects on the road only as other cars, trucks, cyclists, and pedestrians. A human being pushing a bicycle did not look like any of these. It’s easy to guess that on a much bigger scale, say in a supercomputer controlling a country’s air defence, the “alignment problem” could potentially start wars.

Double-edged sword

In Nexus, Harari keeps this “alignment problem” and a host of other AI problems, such as its lack of transparency and data privacy, central to his exploration of how AI could shape mankind’s political future. The starting point of the book is that invisible information networks comprising narratives, stories, and myths have shaped humanity all through history. Whether oral traditions, cave paintings, primitive symbols, or religious texts, these networks have helped foster belief systems and given a sense of identity to groups of people, creating complex societies. Sometimes, they have also been a debilitating force, like the use of fiction and propaganda in the Stalinist and Nazi eras. Thus, information has always been a double-edged sword. And now that algorithms and AI are the hub of the modern-day information network, how does that work for us politically?

Harari postulates that on at least two key grounds, democracies seem vulnerable. First, as misinformation and bots increasingly dictate what we consume or perceive as news, “free public conversation on key issues”, a necessary condition for democracy, has been hampered and we are slipping into a sort of digital anarchy. Second, with more and more decision-making passing to computers, democracies are losing something crucial to their existence: transparency, accountability and a self-correcting mechanism. After all, voters rarely understand the “alien intelligence” that is guiding every bit of their lives, so how can they challenge their human leaders?

Surprisingly, in Harari’s hypothesis, the AI-induced concentration of information would actually be a mixed bag for totalitarian regimes. While it would be easier for them to establish total surveillance systems and make any resistance impossible, they would be exposed to an alignment problem too. A surveillance system designed to create a completely totalitarian state would have so much power that it could sometimes act against the interests of the autocrat and make him vulnerable.

Nexus (Fern Press, 2024) by Yuval Noah Harari was published by Penguin recently.