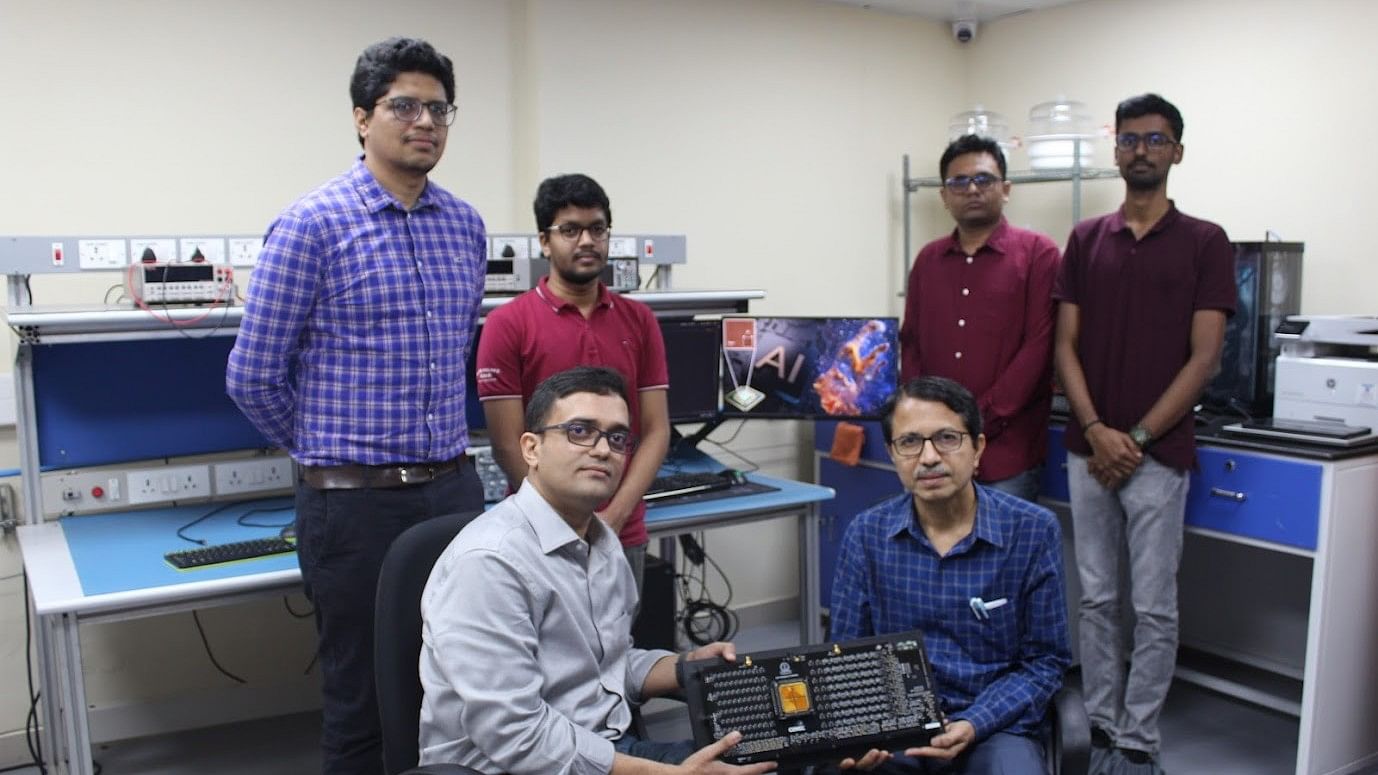

Prof Sreetosh Goswami (seated left), the Principal Investigator, and Prof Navkanta Bhat, who headed the circuits and systems design for the project.

Others in the picture (standing, from left) are Deepak Sharma, who performed the circuit and system design and electrical characterisations; Bidyabhusan Kundu, who tackled the mathematical modelling; Santi Prasad Rath, who handled the synthesis and fabrication; and Harivignesh S, who crafted bio-inspired neuronal response behaviour.

Credit: DH Photo

Scientists at the IISc, Bengaluru, are reporting a momentous breakthrough in neuromorphic, or brain-inspired, computing technology that could allow India to compete in the global AI race currently underway. It could also drastically democratise the landscape of AI computing, moving it away from today’s ‘cloud computing’ model, which requires large, energy-guzzling data centres, and towards an ‘edge computing’ paradigm that runs on your personal device, laptop or mobile phone.

The breakthrough, being reported today in the journal Nature, was achieved by a group of scientists and students led by Prof Sreetosh Goswami at the Centre for Nano Science and Engineering (CeNSe), IISc. What they have done, essentially, is to develop a type of semiconductor device called a Memristor, but using a metal-organic film rather than conventional silicon-based technology. This material enables the Memristor to mimic the way the biological brain processes information using networks of neurons and synapses, rather than the way digital computers do. The ‘molecular mastermind’ behind the discovery was Sreetosh’s father, Prof Sreebrata Goswami, who is a visiting scientist at CeNSe.

The Memristor, when integrated with a conventional digital computer, improves its energy efficiency and speed by hundreds of times, thus becoming an extremely energy-efficient ‘AI accelerator’. The silicon circuitry required for the team’s effort -- a 64X64 memristor array integrated with a conventional 65 nm-node silicon processor -- was made by Prof Navkanta Bhat.

When eventually scaled up, the technology could enable the largest and most complex AI tasks – such as large language model (LLM) training – to be done on a laptop or smartphone, rather than requiring a data centre.

How

Today’s digital computing, based on silicon transistor technology and the standard von Neumann architecture – the Input-Storage/Memory-Output model of computing – is running up against processing speed and energy-efficiency limits, especially given the demanding requirements of Artificial Intelligence.

One part of the problem is due to the very nature of digital computing which relies on binary operations, needing to convert all information to be processed into 0s and 1s and breaking down large computational tasks -- the proverbial elephant to be eaten -- into small pieces.

The other part of the problem arises due to the fact that the processor and memory units are separated by a distance and data needs to constantly go back and forth between them for it to be processed, limiting computational speed and adding a hefty energy penalty.

The first problem has been dealt with so far by a brute force approach -- building processors that can perform the large number of steps or operations they need to perform at great speed. But the second problem – the separation between processor and memory -- is a bottleneck, one that has consequences for the whole landscape of computing. It is what causes the need for large amounts of processing power and, consequently, heating up of computing infrastructure.

As a result, building AI systems, which requires feeding enormous amounts of data into computers and processing it, can only be done in the ‘cloud’, which is basically a lovely but misleading term for large data centres that need massive amounts of energy to keep the computing infrastructure cool. It is estimated that if computing continues on this path, by 2050, the power needed to run AI data centres will have outstripped the world’s power generation capacity.

Computer chip-makers have taken an approach called In-Memory Computing -- integrating memory silicon with compute silicon -- to overcome these problems, but this has not made a significant dent as it still relies on silicon transistors and digital computing.

IBM and China’s Tsinghua University have tried to emulate the brain using silicon technology with their TrueNorth and Tianjic chips, respectively, but that too hasn’t led to major gains.

That’s why the IISc breakthrough could be globally significant. Prof Sreetosh’s team took the metal-organic film approach to Memristors, which is also an In-Memory Computing approach but one that actually works like the brain’s neuron-synapse circuit.

“Through the 2010s, large companies tried to mimic the brain while sticking to silicon transistors. They realised that they were not making significant gains. In the 2020s, the research investments are moving back to academia because there is a realisation that we need much more fundamental discoveries to actually achieve brain-inspired computing. If you just take a brute-force approach to use transistors and enforce certain algorithms, that’s not going to work,” Prof Sreetosh Goswami told DH.

Neuromorphic Computing

Our analog brain operates differently from a digital computer. Memory and processing are not discrete in the brain. Also, unlike digital computers, it does not process information as 0s and 1s, and it does not rely on breaking the proverbial elephant to be eaten into small pieces. Instead, it processes information by swallowing big chunks of data, drastically reducing the number of steps required to get to the answer. The brain is therefore extremely energy-efficient. The combination of analog computing and brain-mimicking Memristor technology makes neuromorphic computing fast and highly efficient.

The device developed by Prof Sreetosh Goswami and his team stored and processed data not in two states (0s and 1s) but in an astonishing 16,520 states at a time. The fundamental math behind AI algorithms is vector-matrix multiplication, and the device reduces the number of steps required to multiply two 64X64 matrices to 64, whereas a digital computer would need 262,144 operations to perform the same work.
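The arithmetic behind those figures can be checked with a short sketch. This is an illustration only, not the team's method: it assumes an idealised array that completes one whole vector-matrix product per step, and the function names (`digital_matmul_ops`, `matmul_by_rows`, `bits_per_device`) are made up for this example. Only the numbers 64, 16,520 and 262,144 come from the article.

```python
import math

N = 64           # array dimension reported in the article (64X64)
STATES = 16_520  # distinct states per device, as reported


def digital_matmul_ops(n: int) -> int:
    """Count scalar multiply-accumulate operations for an n x n by n x n product."""
    ops = 0
    for _row in range(n):
        for _col in range(n):
            ops += n  # one multiply-accumulate per term of each dot product
    return ops


def vector_matrix_product(vec, mat):
    """One vector-matrix product, done in software here.
    Assumption: an idealised memristor array would do this in a single step."""
    return [sum(v * mat[k][j] for k, v in enumerate(vec))
            for j in range(len(mat[0]))]


def matmul_by_rows(a, b):
    """Multiply a by b one input row at a time, counting one step per row."""
    steps = 0
    out = []
    for row in a:
        out.append(vector_matrix_product(row, b))
        steps += 1
    return out, steps


def bits_per_device(states: int) -> float:
    """Equivalent number of binary digits a device with `states` levels can hold."""
    return math.log2(states)


if __name__ == "__main__":
    identity = [[1 if i == j else 0 for j in range(N)] for i in range(N)]
    _, steps = matmul_by_rows(identity, identity)
    print("digital operations:", digital_matmul_ops(N))
    print("array steps:", steps)
    print("bits per device: %.2f" % bits_per_device(STATES))
```

Under that assumption, the 64 steps correspond to the 64 rows of the first matrix, while 262,144 is 64 x 64 x 64, the number of individual multiply-accumulate operations in the conventional method. By the same reckoning, 16,520 states is roughly what 14 binary digits can represent.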

The team used the device, plugged into a regular desktop computer, to do some complex space image processing, recreating NASA’s iconic “Pillars of Creation” image from the data sent out by the James Webb telescope. It did so in a fraction of the time and energy that traditional computers would need. The team reports an energy efficiency of 4.1 tera-operations per second per watt (TOPS/W), “220 times more efficient than an NVIDIA K80 GPU, with considerable room for further improvements.”
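To put the efficiency figure in perspective, the sketch below converts it into energy per operation and works out the GPU baseline implied by the "220 times" comparison. It is plain unit arithmetic on the two numbers quoted above; the implied baseline is derived here, not a figure reported by the team or by NVIDIA, and the function names are hypothetical.

```python
REPORTED_TOPS_PER_WATT = 4.1  # efficiency quoted in the article
REPORTED_RATIO = 220          # "220 times more efficient" than the GPU


def joules_per_operation(tops_per_watt: float) -> float:
    """Energy per operation: 1 W sustains tops_per_watt * 1e12 operations each second."""
    return 1.0 / (tops_per_watt * 1e12)


def implied_baseline_tops_per_watt(tops_per_watt: float, ratio: float) -> float:
    """Baseline efficiency implied by the ratio (derived, not reported)."""
    return tops_per_watt / ratio


if __name__ == "__main__":
    energy = joules_per_operation(REPORTED_TOPS_PER_WATT)
    baseline = implied_baseline_tops_per_watt(REPORTED_TOPS_PER_WATT, REPORTED_RATIO)
    print("energy per operation: %.3f picojoules" % (energy * 1e12))
    print("implied GPU baseline: %.4f TOPS/W" % baseline)
```

In other words, 4.1 TOPS/W works out to about a quarter of a picojoule per operation, and the comparison implies a baseline of a little under 0.02 TOPS/W.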

The cutting-edge research, carried out with funding from the Ministry of Electronics and Information Technology (MEITY), also involved research students Deepak Sharma, who performed the circuit and system design and electrical characterisations; Santi Prasad Rath, who handled the synthesis and fabrication; Bidyabhusan Kundu, who tackled the mathematical modelling; and Harivignesh S, who crafted bio-inspired neuronal response behaviour. Their work was strengthened by collaborations with Prof Stanley Williams at Texas A&M University and Prof Damien Thompson at the University of Limerick.

“Neuromorphic computing has had its fair share of unsolved challenges for over a decade. When I wrote to the editors of Nature the first time to accept our submission, I listed six such challenges and said that it would be worth publishing if we solved even one of those challenges, but with our decade-long research and discovery, we have solved all six of them and almost nailed the perfect system,” Prof Sreetosh Goswami said.

Path Ahead

The team has so far demonstrated proof of concept and the integration of the molecular film Memristor technology with conventional digital systems to act as an ‘AI accelerator’ with a 64X64 array. They intend to build larger arrays of up to 256X256. MEITY’s funding is also meant to take the research all the way to developing and demonstrating a System-on-Chip solution and incubating a start-up to take it commercial. Prof Goswami expects to do so over the next three years. If the IISc team succeeds, India will have a potent horse in the global AI race.